-

创建者:

虚拟的现实,上次更新时间:7月 02, 2025 需要 6 分钟阅读时间

1. 前言

大部分的情况下我们可以通过 ollama 来加载其自带的模型,但如果我们所需的模型 ollama 没有就需要我们自己来 finetune 微调后的模型,这里我们选择使用 Llama.cpp 来量化自己的模型为 Ollama 可以运行的格式。

下载模型的方式有多种,国内的环境可以参考D003-节省时间:AI 模型靠谱下载方案汇总 的魔塔下载方式。

1.1. 下载 phi-2 模型

docker pull python:3.12-slim

docker run -d --name=downloader -v `pwd`:/models python:3.12-slim tail -f /etc/hosts

sed -i 's/snapshot.debian.org/mirrors.tuna.tsinghua.edu.cn/g' /etc/apt/sources.list.d/debian.sources

sed -i 's/deb.debian.org/mirrors.tuna.tsinghua.edu.cn/g' /etc/apt/sources.list.d/debian.sources

pip config set global.index-url https://mirrors.tuna.tsinghua.edu.cn/pypi/web/simple

pip config set global.index-url https://mirrors.aliyun.com/pypi/simple/

pip install modelscope

pip install huggingface_hub

python -c "from modelscope import snapshot_download;snapshot_download('AI-ModelScope/phi-2', cache_dir='./models/')"

2. 构建新版本的 llama.cpp

2.1. 配置系统基础环境

sed -i 's/snapshot.debian.org/mirrors.tuna.tsinghua.edu.cn/g' /etc/apt/sources.list.d/debian.sources sed -i 's/deb.debian.org/mirrors.tuna.tsinghua.edu.cn/g' /etc/apt/sources.list.d/debian.sources pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple apt update

2.2. 下载并编译 llama.cpp

- 下载代码

- 切换工作目录

- 常规模式构建 llama.cpp

git clone https://github.com/ggerganov/llama.cpp.git --depth=1 cd llama.cpp apt install cmake build-essential git cmake -B build cmake --build build --config Release # 如果你是 macOS,希望使用 Apple Metal GGML_NO_METAL=1 cmake --build build --config Release # 如果你使用 Nvidia GPU apt install nvidia-cuda-toolkit -y cmake -B build -DGGML_CUDA=ON cmake --build build --config Release

2.3. 通过 llama.cpp 转换模型

apt install python3-full pip install numpy pyyaml torch safetensors transformers sentencepiece ./convert_hf_to_gguf.py /data/models/models/AI-ModelScope/phi-2/ ./convert_hf_to_gguf.py /data/models/models/LLM-Research/Meta-Llama-3___1-8B-Instruct/

INFO:hf-to-gguf:Loading model: Meta-Llama-3___1-8B-Instruct

INFO:gguf.gguf_writer:gguf: This GGUF file is for Little Endian only

INFO:hf-to-gguf:Exporting model...

INFO:hf-to-gguf:rope_freqs.weight, torch.float32 --> F32, shape = {64}

INFO:hf-to-gguf:gguf: loading model weight map from 'model.safetensors.index.json'

INFO:hf-to-gguf:gguf: loading model part 'model-00001-of-00004.safetensors'

INFO:hf-to-gguf:token_embd.weight, torch.bfloat16 --> F16, shape = {4096, 128256}

INFO:hf-to-gguf:blk.0.attn_norm.weight, torch.bfloat16 --> F32, shape = {4096}

INFO:hf-to-gguf:blk.0.ffn_down.weight, torch.bfloat16 --> F16, shape = {14336, 4096}

INFO:hf-to-gguf:blk.0.ffn_gate.weight, torch.bfloat16 --> F16, shape = {4096, 14336}

INFO:hf-to-gguf:blk.0.ffn_up.weight, torch.bfloat16 --> F16, shape = {4096, 14336}

INFO:hf-to-gguf:blk.0.ffn_norm.weight, torch.bfloat16 --> F32, shape = {4096}

INFO:hf-to-gguf:blk.0.attn_k.weight, torch.bfloat16 --> F16, shape = {4096, 1024}

INFO:hf-to-gguf:blk.0.attn_output.weight, torch.bfloat16 --> F16, shape = {4096, 4096}

INFO:hf-to-gguf:blk.0.attn_q.weight, torch.bfloat16 --> F16, shape = {4096, 4096}

INFO:hf-to-gguf:blk.0.attn_v.weight, torch.bfloat16 --> F16, shape = {4096, 1024}

INFO:hf-to-gguf:blk.1.attn_norm.weight, torch.bfloat16 --> F32, shape = {4096}

INFO:hf-to-gguf:blk.1.ffn_down.weight, torch.bfloat16 --> F16, shape = {14336, 4096}

INFO:hf-to-gguf:blk.1.ffn_gate.weight, torch.bfloat16 --> F16, shape = {4096, 14336}

INFO:hf-to-gguf:blk.1.ffn_up.weight, torch.bfloat16 --> F16, shape = {4096, 14336}

INFO:hf-to-gguf:blk.1.ffn_norm.weight, torch.bfloat16 --> F32, shape = {4096}

INFO:hf-to-gguf:blk.1.attn_k.weight, torch.bfloat16 --> F16, shape = {4096, 1024}

INFO:hf-to-gguf:blk.1.attn_output.weight, torch.bfloat16 --> F16, shape = {4096, 4096}

INFO:hf-to-gguf:blk.1.attn_q.weight, torch.bfloat16 --> F16, shape = {4096, 4096}

INFO:hf-to-gguf:blk.1.attn_v.weight, torch.bfloat16 --> F16, shape = {4096, 1024}

INFO:hf-to-gguf:blk.2.attn_norm.weight, torch.bfloat16 --> F32, shape = {4096}

INFO:hf-to-gguf:blk.2.ffn_down.weight, torch.bfloat16 --> F16, shape = {14336, 4096}

INFO:hf-to-gguf:blk.2.ffn_gate.weight, torch.bfloat16 --> F16, shape = {4096, 14336}

INFO:hf-to-gguf:blk.2.ffn_up.weight, torch.bfloat16 --> F16, shape = {4096, 14336}

INFO:hf-to-gguf:blk.2.ffn_norm.weight, torch.bfloat16 --> F32, shape = {4096}

INFO:hf-to-gguf:blk.2.attn_k.weight, torch.bfloat16 --> F16, shape = {4096, 1024}

INFO:hf-to-gguf:blk.2.attn_output.weight, torch.bfloat16 --> F16, shape = {4096, 4096}

INFO:hf-to-gguf:blk.2.attn_q.weight, torch.bfloat16 --> F16, shape = {4096, 4096}

INFO:hf-to-gguf:blk.2.attn_v.weight, torch.bfloat16 --> F16, shape = {4096, 1024}

INFO:hf-to-gguf:blk.3.attn_norm.weight, torch.bfloat16 --> F32, shape = {4096}

INFO:hf-to-gguf:blk.3.ffn_down.weight, torch.bfloat16 --> F16, shape = {14336, 4096}

需要注意:torch 这个组件比较大,接近 700M

2.4. 验证转换后模型

通过以下的指令验证转换后的模型

./build/bin/llama-lookup-stats -m /data/models/models/AI-ModelScope/phi-2/phi-2.8B-2-F16.gguf

root@debian:/tmp/llama.cpp-master# ./build/bin/llama-lookup-stats -m /data/models/models/

added_tokens.json generation_config.json NOTICE.md

AI-ModelScope/ .gitattributes README.md

.cache/ LICENSE SECURITY.md

CODE_OF_CONDUCT.md LLM-Research/ ._____temp/

config.json merges.txt

root@debian:/tmp/llama.cpp-master# ./build/bin/llama-lookup-stats -m /data/models/models/AI-ModelScope/phi-2/

added_tokens.json .msc

CODE_OF_CONDUCT.md .mv

config.json NOTICE.md

configuration.json phi-2.8B-2-F16.gguf

generation_config.json README.md

LICENSE SECURITY.md

.mdl special_tokens_map.json

merges.txt tokenizer_config.json

model-00001-of-00002.safetensors tokenizer.json

model-00002-of-00002.safetensors vocab.json

model.safetensors.index.json

root@debian:/tmp/llama.cpp-master# ./build/bin/llama-lookup-stats -m /data/models/models/AI-ModelScope/phi-2/phi-2.8B-2-F16.gguf

build: 0 (unknown) with cc (Debian 12.2.0-14) 12.2.0 for x86_64-linux-gnu

llama_model_loader: loaded meta data with 31 key-value pairs and 453 tensors from /data/models/models/AI-ModelScope/phi-2/phi-2.8B-2-F16.gguf (version GGUF V3 (latest))

也可以通过指令对模型“跑分”测试

./build/bin/llama-bench -m /data/models/models/AI-ModelScope/phi-2/phi-2.8B-2-F16.gguf

root@debian:/tmp/llama.cpp-master# ./build/bin/llama-bench -m /data/models/models/AI-ModelScope/phi-2/phi-2.8B-2-F16.gguf

| model | size | params | backend | threads | test | t/s |

| ------------------------------ | ---------: | ---------: | ---------- | ------: | ------------: | -------------------: |

| phi2 3B F16 | 5.18 GiB | 2.78 B | CPU | 6 | pp512 | 62.43 ± 1.72 |

phi2 3B F16 5.18 GiB 2.78 B CPU 6 tg128 3.58 ± 0.00

或者使用 simple 程序,来完成上面两个命令的“打包操作”

./build/bin/llama-simple -m /data/models/models/AI-ModelScope/phi-2/phi-2.8B-2-F16.gguf

main: decoded 17 tokens in 5.21 s, speed: 3.26 t/s

llama_perf_sampler_print: sampling time = 0.60 ms / 18 runs ( 0.03 ms per token, 30201.34 tokens per second)

llama_perf_context_print: load time = 23402.49 ms

llama_perf_context_print: prompt eval time = 446.55 ms / 4 tokens ( 111.64 ms per token, 8.96 tokens per second)

llama_perf_context_print: eval time = 4758.15 ms / 17 runs ( 279.89 ms per token, 3.57 tokens per second)

llama_perf_context_print: total time = 28169.79 ms / 21 tokens

2.5. llama.cpp 量化模型

llama.cpp 支持的量化模型可以参考官网文件 https://github.com/ggerganov/llama.cpp/blob/master/examples/quantize/quantize.cpp

static const std::vector<struct quant_option> QUANT_OPTIONS = {

{ "Q4_0", LLAMA_FTYPE_MOSTLY_Q4_0, " 4.34G, +0.4685 ppl @ Llama-3-8B", },

{ "Q4_1", LLAMA_FTYPE_MOSTLY_Q4_1, " 4.78G, +0.4511 ppl @ Llama-3-8B", },

{ "Q5_0", LLAMA_FTYPE_MOSTLY_Q5_0, " 5.21G, +0.1316 ppl @ Llama-3-8B", },

{ "Q5_1", LLAMA_FTYPE_MOSTLY_Q5_1, " 5.65G, +0.1062 ppl @ Llama-3-8B", },

{ "IQ2_XXS", LLAMA_FTYPE_MOSTLY_IQ2_XXS, " 2.06 bpw quantization", },

{ "IQ2_XS", LLAMA_FTYPE_MOSTLY_IQ2_XS, " 2.31 bpw quantization", },

{ "IQ2_S", LLAMA_FTYPE_MOSTLY_IQ2_S, " 2.5 bpw quantization", },

{ "IQ2_M", LLAMA_FTYPE_MOSTLY_IQ2_M, " 2.7 bpw quantization", },

{ "IQ1_S", LLAMA_FTYPE_MOSTLY_IQ1_S, " 1.56 bpw quantization", },

{ "IQ1_M", LLAMA_FTYPE_MOSTLY_IQ1_M, " 1.75 bpw quantization", },

{ "TQ1_0", LLAMA_FTYPE_MOSTLY_TQ1_0, " 1.69 bpw ternarization", },

{ "TQ2_0", LLAMA_FTYPE_MOSTLY_TQ2_0, " 2.06 bpw ternarization", },

{ "Q2_K", LLAMA_FTYPE_MOSTLY_Q2_K, " 2.96G, +3.5199 ppl @ Llama-3-8B", },

{ "Q2_K_S", LLAMA_FTYPE_MOSTLY_Q2_K_S, " 2.96G, +3.1836 ppl @ Llama-3-8B", },

{ "IQ3_XXS", LLAMA_FTYPE_MOSTLY_IQ3_XXS, " 3.06 bpw quantization", },

{ "IQ3_S", LLAMA_FTYPE_MOSTLY_IQ3_S, " 3.44 bpw quantization", },

{ "IQ3_M", LLAMA_FTYPE_MOSTLY_IQ3_M, " 3.66 bpw quantization mix", },

{ "Q3_K", LLAMA_FTYPE_MOSTLY_Q3_K_M, "alias for Q3_K_M" },

{ "IQ3_XS", LLAMA_FTYPE_MOSTLY_IQ3_XS, " 3.3 bpw quantization", },

{ "Q3_K_S", LLAMA_FTYPE_MOSTLY_Q3_K_S, " 3.41G, +1.6321 ppl @ Llama-3-8B", },

{ "Q3_K_M", LLAMA_FTYPE_MOSTLY_Q3_K_M, " 3.74G, +0.6569 ppl @ Llama-3-8B", },

{ "Q3_K_L", LLAMA_FTYPE_MOSTLY_Q3_K_L, " 4.03G, +0.5562 ppl @ Llama-3-8B", },

{ "IQ4_NL", LLAMA_FTYPE_MOSTLY_IQ4_NL, " 4.50 bpw non-linear quantization", },

{ "IQ4_XS", LLAMA_FTYPE_MOSTLY_IQ4_XS, " 4.25 bpw non-linear quantization", },

{ "Q4_K", LLAMA_FTYPE_MOSTLY_Q4_K_M, "alias for Q4_K_M", },

{ "Q4_K_S", LLAMA_FTYPE_MOSTLY_Q4_K_S, " 4.37G, +0.2689 ppl @ Llama-3-8B", },

{ "Q4_K_M", LLAMA_FTYPE_MOSTLY_Q4_K_M, " 4.58G, +0.1754 ppl @ Llama-3-8B", },

{ "Q5_K", LLAMA_FTYPE_MOSTLY_Q5_K_M, "alias for Q5_K_M", },

{ "Q5_K_S", LLAMA_FTYPE_MOSTLY_Q5_K_S, " 5.21G, +0.1049 ppl @ Llama-3-8B", },

{ "Q5_K_M", LLAMA_FTYPE_MOSTLY_Q5_K_M, " 5.33G, +0.0569 ppl @ Llama-3-8B", },

{ "Q6_K", LLAMA_FTYPE_MOSTLY_Q6_K, " 6.14G, +0.0217 ppl @ Llama-3-8B", },

{ "Q8_0", LLAMA_FTYPE_MOSTLY_Q8_0, " 7.96G, +0.0026 ppl @ Llama-3-8B", },

{ "F16", LLAMA_FTYPE_MOSTLY_F16, "14.00G, +0.0020 ppl @ Mistral-7B", },

{ "BF16", LLAMA_FTYPE_MOSTLY_BF16, "14.00G, -0.0050 ppl @ Mistral-7B", },

{ "F32", LLAMA_FTYPE_ALL_F32, "26.00G @ 7B", },

选择 Q4_K_M 一类的量化类型,保持小巧,又不会太掉性能,可以根据自己的习惯来进行量化。

量化完成后可以选择再次验证

./build/bin/llama-quantize /data/models/models/AI-ModelScope/phi-2/phi-2.8B-2-F16.gguf Q4_K_M ./build/bin/llama-simple -m /data/models/models/AI-ModelScope/phi-2/ggml-model-Q4_K_M.gguf

main: decoded 17 tokens in 1.64 s, speed: 10.36 t/s

llama_perf_sampler_print: sampling time = 0.38 ms / 18 runs ( 0.02 ms per token, 47368.42 tokens per second)

llama_perf_context_print: load time = 5653.80 ms

llama_perf_context_print: prompt eval time = 112.59 ms / 4 tokens ( 28.15 ms per token, 35.53 tokens per second)

llama_perf_context_print: eval time = 1522.58 ms / 17 runs ( 89.56 ms per token, 11.17 tokens per second)

llama_perf_context_print: total time = 7181.82 ms / 21 tokens

对比量化前性能的提升非常明显。

2.6. Ollama 模型的构建

创建一个干净的目录,将刚刚在其他目录中量化好的模型放进来,创建一个 ollama 模型配置文件,方便后续的操作。

mkdir ollama cd ollama cp /data/models/models/AI-ModelScope/phi-2/ggml-model-Q4_K_M.gguf echo "FROM ./ggml-model-Q4_K_M.gguf" > Modelfile docker run -d --gpus=all -v `pwd`:/root/.ollama -p 11434:11434 --name ollama-llama3 ollama/ollama:0.5.2 docker exec -it ollama-llama3 bash ollama create custom_llama_3_1 -f ~/.ollama/Modelfile llama show custom_llama_3_1 du -hs ~/.ollama/models/

3. GGUF 量化模式说明

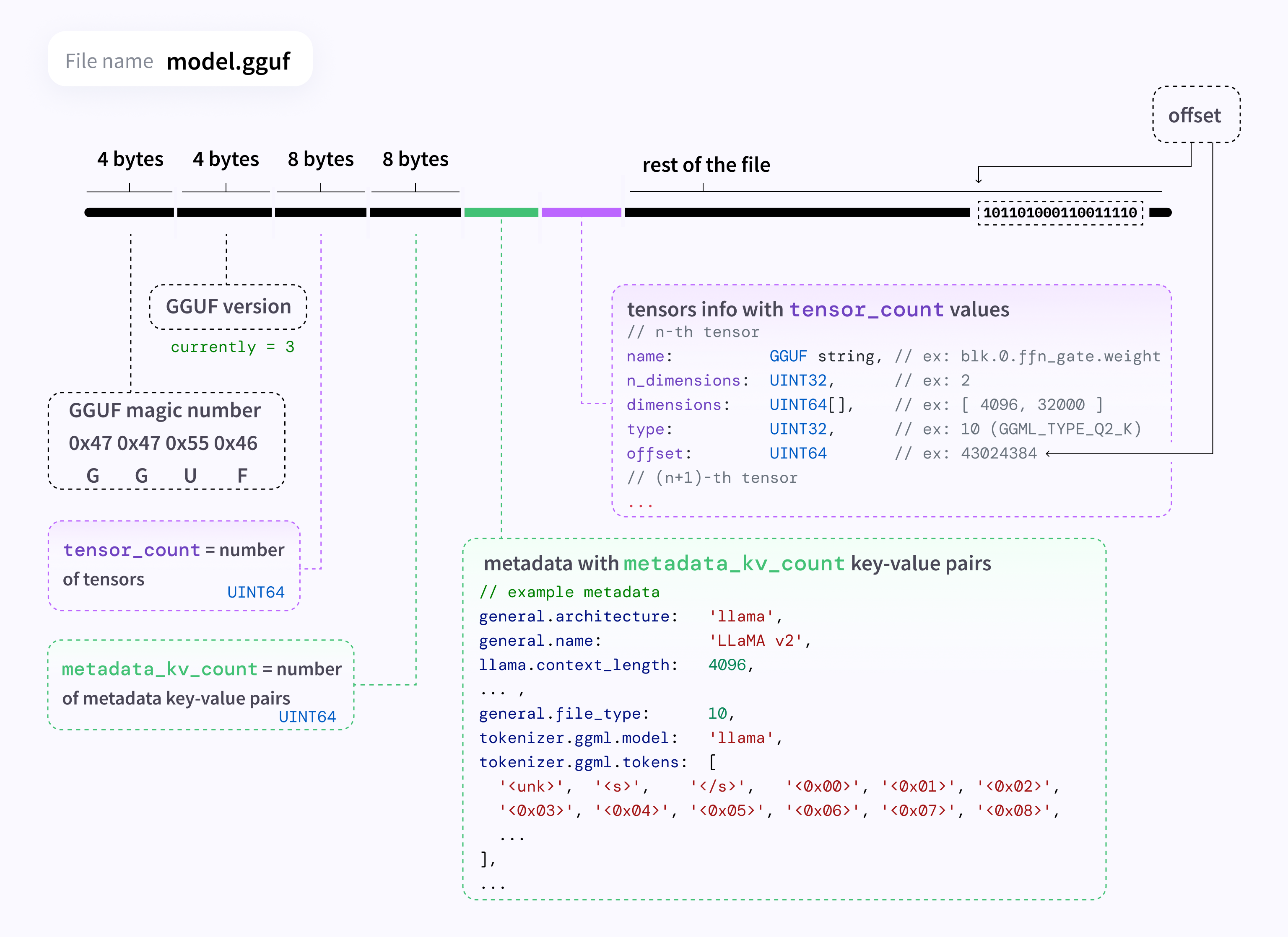

GGUF:一种二进制模型文件格式,前身是GGML,这里的 GG 前缀就是作者的名字缩写,这种格式优化了模型文件的读取和写入速度,而且包含元数据,与仅包含张量的文件格式(如safetensors )不同,GGUF文件是 All-in-one 的。

量化:量化是一种通过降低模型参数的表示精度来减少模型大小和计算需求的方法,比如,把单精度FP32转变为INT8来减少存储和计算成本。量化有非常多的计算方法,比如常见的线性(仿射)量化:公式是 r=S(q-Z) ,意思是将实数值r 映射为量化的整数值q,其中缩放因子 S 和零点Z是根据参数的分布统计计算出来的。

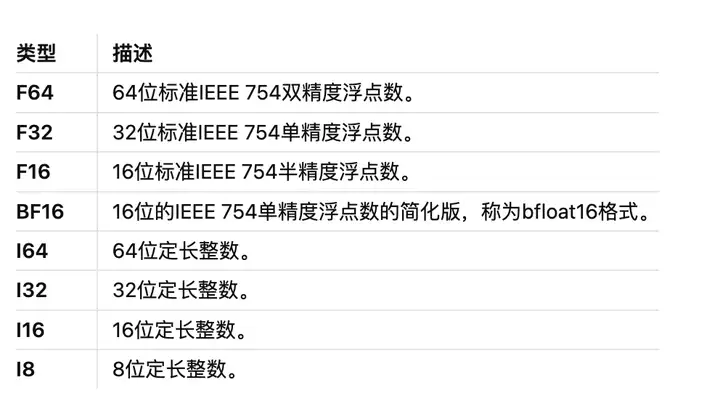

3.1. 基础数值类型

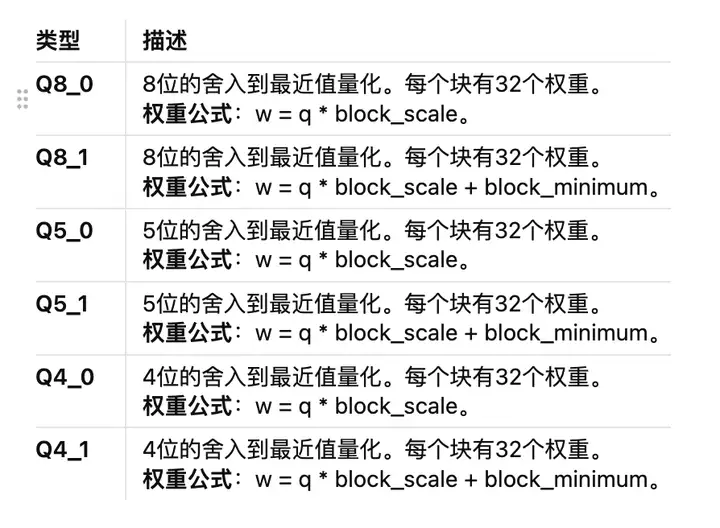

3.2. 传统量化类型

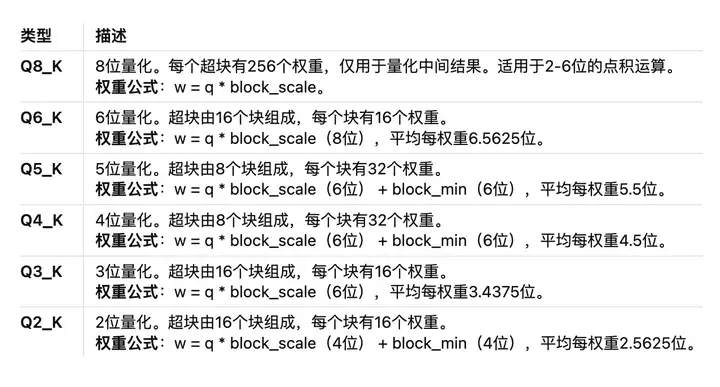

K 系列量化类型

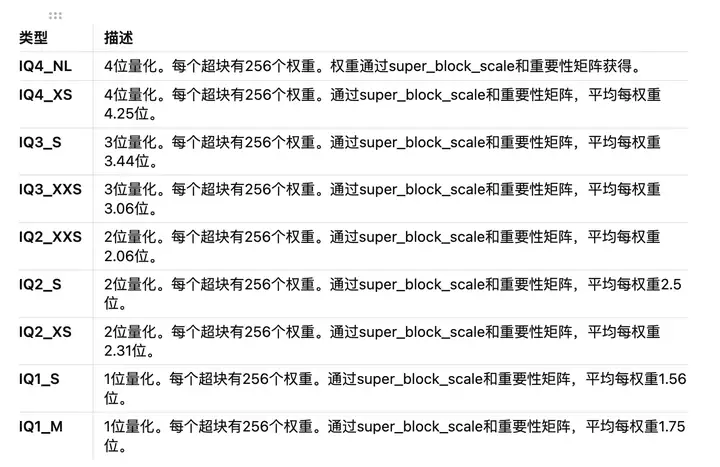

IQ 系列量化类型

4. 常见问题处理

4.1. 转换过程出现 NotImplementedError: BPE pre-tokenizer was not recognized 的错误提示

WARNING:hf-to-gguf:**************************************************************************************

WARNING:hf-to-gguf:** WARNING: The BPE pre-tokenizer was not recognized!

WARNING:hf-to-gguf:** There are 2 possible reasons for this:

WARNING:hf-to-gguf:** - the model has not been added to convert_hf_to_gguf_update.py yet

WARNING:hf-to-gguf:** - the pre-tokenization config has changed upstream

WARNING:hf-to-gguf:** Check your model files and convert_hf_to_gguf_update.py and update them accordingly.

WARNING:hf-to-gguf:** ref: https://github.com/ggml-org/llama.cpp/pull/6920

WARNING:hf-to-gguf:**

WARNING:hf-to-gguf:** chkhsh: b0f33aec525001c9de427a8f9958d1c8a3956f476bec64403680521281c032e2

WARNING:hf-to-gguf:**************************************************************************************

WARNING:hf-to-gguf:

问题原因:

该问题的具体原因参考 https://github.com/ggml-org/llama.cpp/issues/8649 记录

解决方案:

- 修改并添加 convert_hf_to_gguf_update.py 文件中对应模型的内容

- 保存后重新执行该脚本

- 无标签

添加评论